This post is based on material from Docker in Practice, available on Manning’s Early Access Program.

This post is going to talk about playing with Kubernetes using Docker. Setting up Kubernetes can be a bit of a pain, but fortunately there’s a fast way to play with it.

Get Going

1) Allow any registry insecurely

To allow applications to contact the registry internal to OpenShift, you will need to start your Docker daemon allowing insecure registries. For simplicity we’re going to allow any registry to be accessed.

Change your Docker daemon configuration script so that it contains this line:

DOCKER_OPTS="--insecure-registry 0.0.0.0/0"

or add the insecure-registry argument as above to the end of the existing uncommented DOCKER_OPTS line.

The file to change will depend on your distribution. systemd users will need to update /etc/sysconfig/docker; Ubuntu users should update /etc/default/docker.

Once done, restart your Docker daemon with, e.g.:
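The edit described above can be scripted. The following is only a sketch: `add_insecure_registry` is a hypothetical helper name, and it assumes the file uses a single double-quoted `DOCKER_OPTS="..."` line, as in the example above. Pass it whichever file applies to your distribution.

```shell
# Hypothetical helper: add the insecure-registry flag to a Docker options file.
# The file path is an argument because it differs between distributions.
add_insecure_registry() {
    conf_file="$1"
    flag="--insecure-registry 0.0.0.0/0"

    # Nothing to do if the flag is already present.
    if grep -q -- "--insecure-registry 0.0.0.0/0" "$conf_file" 2>/dev/null; then
        return 0
    fi

    if grep -q '^DOCKER_OPTS="' "$conf_file" 2>/dev/null; then
        # Append the flag just inside the closing quote of the existing
        # uncommented line; a .bak copy of the original file is kept.
        sed -i.bak "s|^\(DOCKER_OPTS=\".*\)\"[[:space:]]*\$|\1 $flag\"|" "$conf_file"
    else
        # No uncommented DOCKER_OPTS line yet, so add one at the end.
        printf 'DOCKER_OPTS="%s"\n' "$flag" >> "$conf_file"
    fi
}
```

You would call it as root, for example `add_insecure_registry /etc/default/docker` on Ubuntu. It is written to be safe to run more than once.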

sudo service docker restart

or

sudo systemctl restart docker

2) Save some time by downloading some images in advance:

$ docker pull openshift/wildfly-8-centos

Run OpenShift

Run this:

$ docker run \
    -d \
    -v /var/run/docker.sock:/var/run/docker.sock \
    --name openshift-origin-play \
    --net=host \
    --privileged \
    dockerinpractice/openshift-origin-play start --loglevel=4 && \
    docker exec -ti openshift-origin-play bash

You’re now in an OpenShift origin PaaS master and node.

Huh?

The above command’s a bit of a mouthful so let’s break it down.

docker run

Start up a container.

-d

Run the OpenShift origin server as a daemon.

-v /var/run/docker.sock:/var/run/docker.sock

Use the docker server on the host from within the container. This allows us to run docker from within the origin container.

--name openshift-origin-play

Give the container a name we can refer to later. Because container names are unique on a host, this also ensures that only one instance can run at a time.

--net=host

Use the host’s network stack (i.e. don’t contain the network).

--privileged

Allow the container to run things with elevated privileges (well, we are just playing, right?)

dockerinpractice/openshift-origin-play

Use this image. It’s the same as the openshift/origin image with a few things added for debugging and playing.

start --loglevel=4 &&

The default entrypoint (i.e. command) for this image runs the openshift server. We pass the argument “start” to get the server to start up, and --loglevel=4 to make its logging more verbose. If that’s successful…

docker exec -ti openshift-origin-play bash

we enter the container interactively (-ti), using the name we gave it earlier, and run a bash process.
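Because the container is started with -d, the && only tells us that the container was created, not that the server inside it stayed up. A small check before entering it can help when things go wrong. This is a sketch: `container_running` and `enter_openshift` are hypothetical helper names, and it assumes a Docker client recent enough to support `docker ps --format`.

```shell
# Succeeds if a container with exactly this name is currently running.
container_running() {
    docker ps --format '{{.Names}}' | grep -qx -- "$1"
}

# Enter the container if it is up; otherwise show its most recent log lines.
enter_openshift() {
    name="openshift-origin-play"
    if container_running "$name"; then
        docker exec -ti "$name" bash
    else
        echo "$name is not running; recent logs:" >&2
        docker logs --tail 20 "$name" >&2
        return 1
    fi
}
```

Run `enter_openshift` after the `docker run` command. If the server exited during startup you get its logs instead of an error from `docker exec`.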

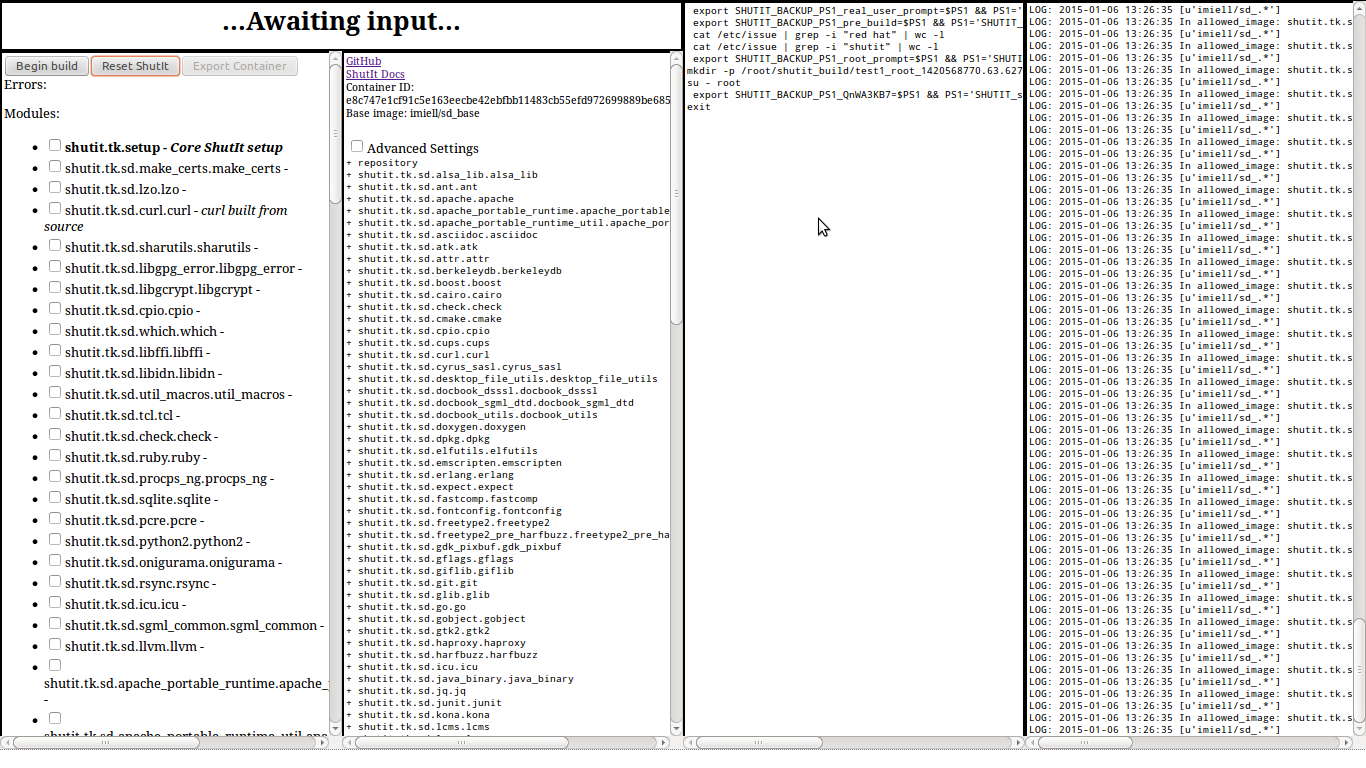

Look at the Console

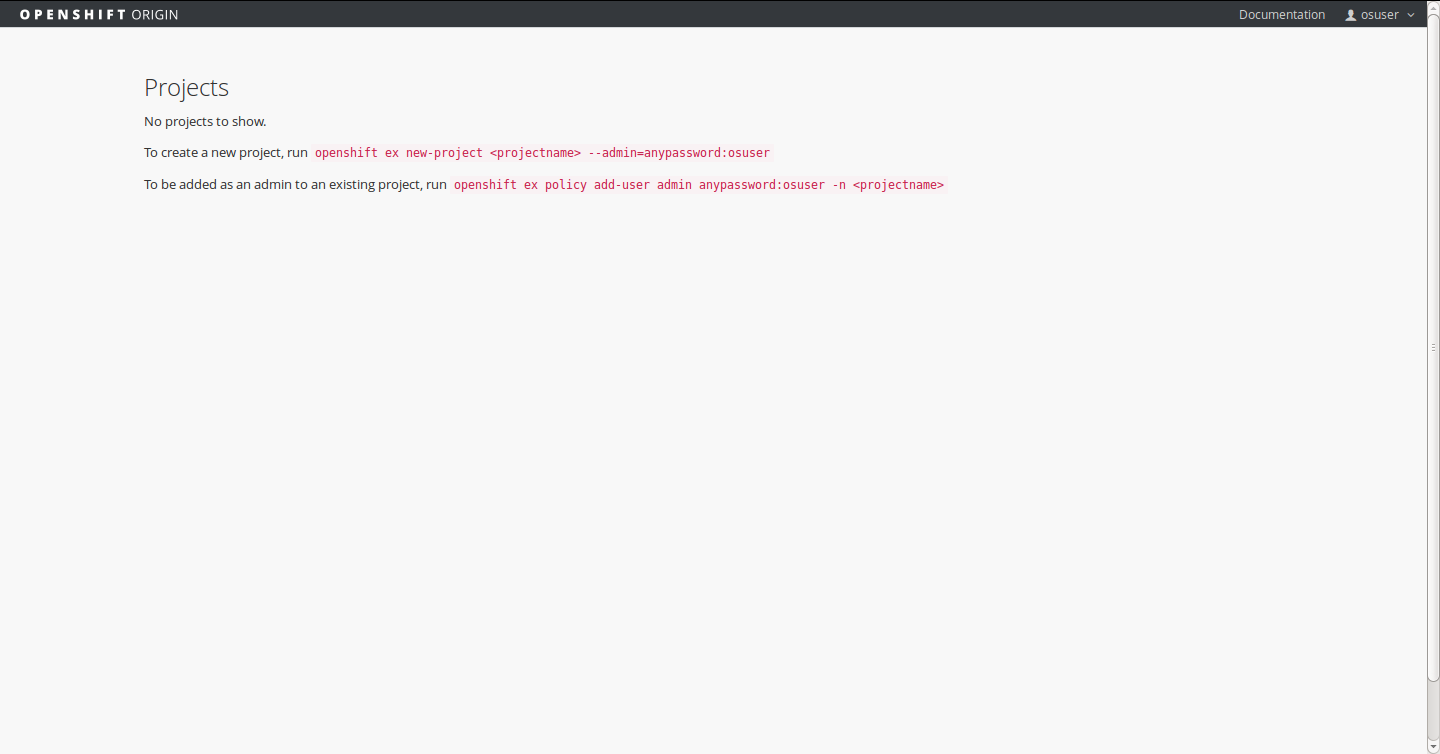

Visit your OpenShift console at https://localhost:8443/console and log in with the username osuser and password dockerinpractice.

Following the instructions there, let’s…

Start the Infrastructure

For convenience, and to avoid some troublesome issues, we set a specific DNS server (we assume here that you have access to Google’s DNS servers):

$ echo "nameserver 8.8.8.8" > /etc/resolv.conf

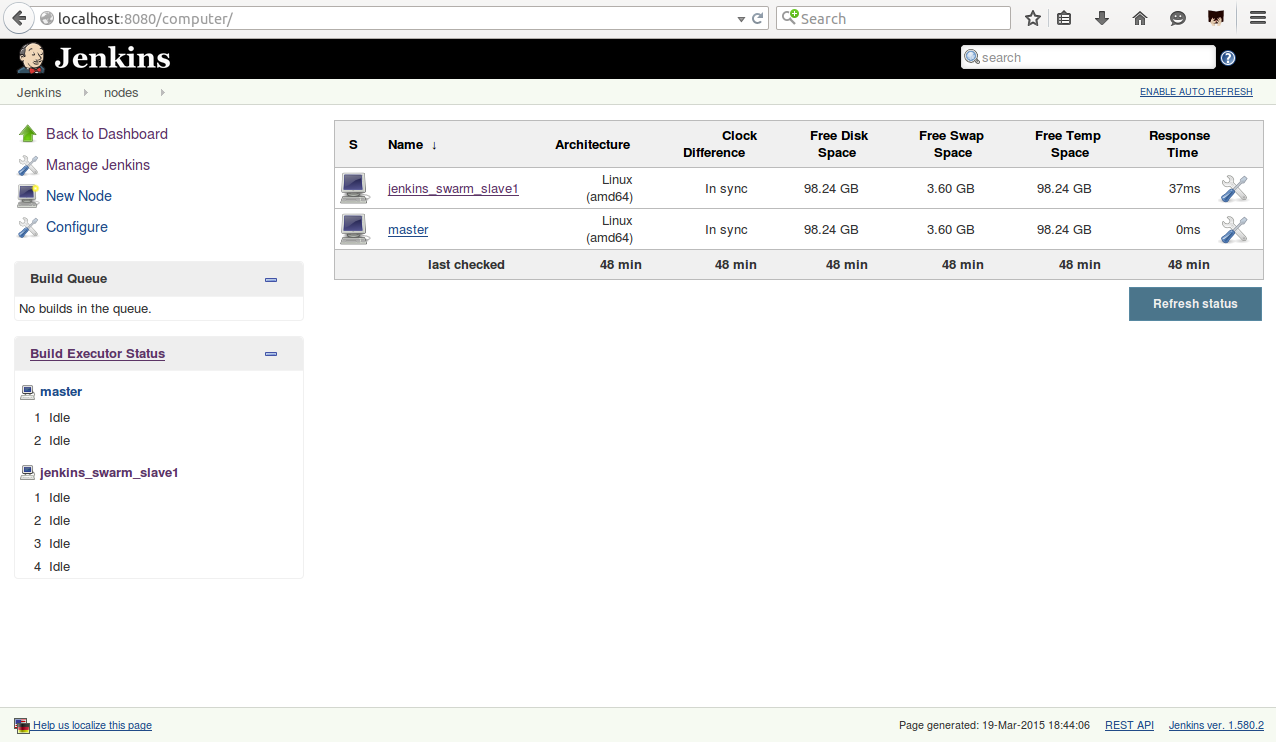

Set up the services OpenShift builds need:

$ openshift ex router --create --credentials=$KUBECONFIG
$ openshift ex registry --create --credentials=$KUBECONFIG

Create a Project

In OpenShift, a project is a namespace for a collection of applications.

$ openshift ex new-project movieplex --admin=anypassword:osuser

Now, to create an application we need to log in as the admin user for the project.

$ su - osuser
$ osc login
Please provide the server URL or just <enter> to use 'https://localhost:8443':
The server uses a certificate signed by unknown authority. You can bypass the certificate check but it will make all connections insecure.
Use insecure connections (strongly discouraged)? [y/N] y
Authenticate for "openshift"
Username: osuser
Password:
Logged into 'https://localhost:8443' as 'osuser'.
Using project 'movieplex'.
Welcome to OpenShift v3! Use 'osc --help' for a list of commands available.
$ osc process -f https://raw.githubusercontent.com/ianmiell/javaee7-hol/master/application-template-jeebuild.json | osc create -f -
$ osc start-build jee-sample-build

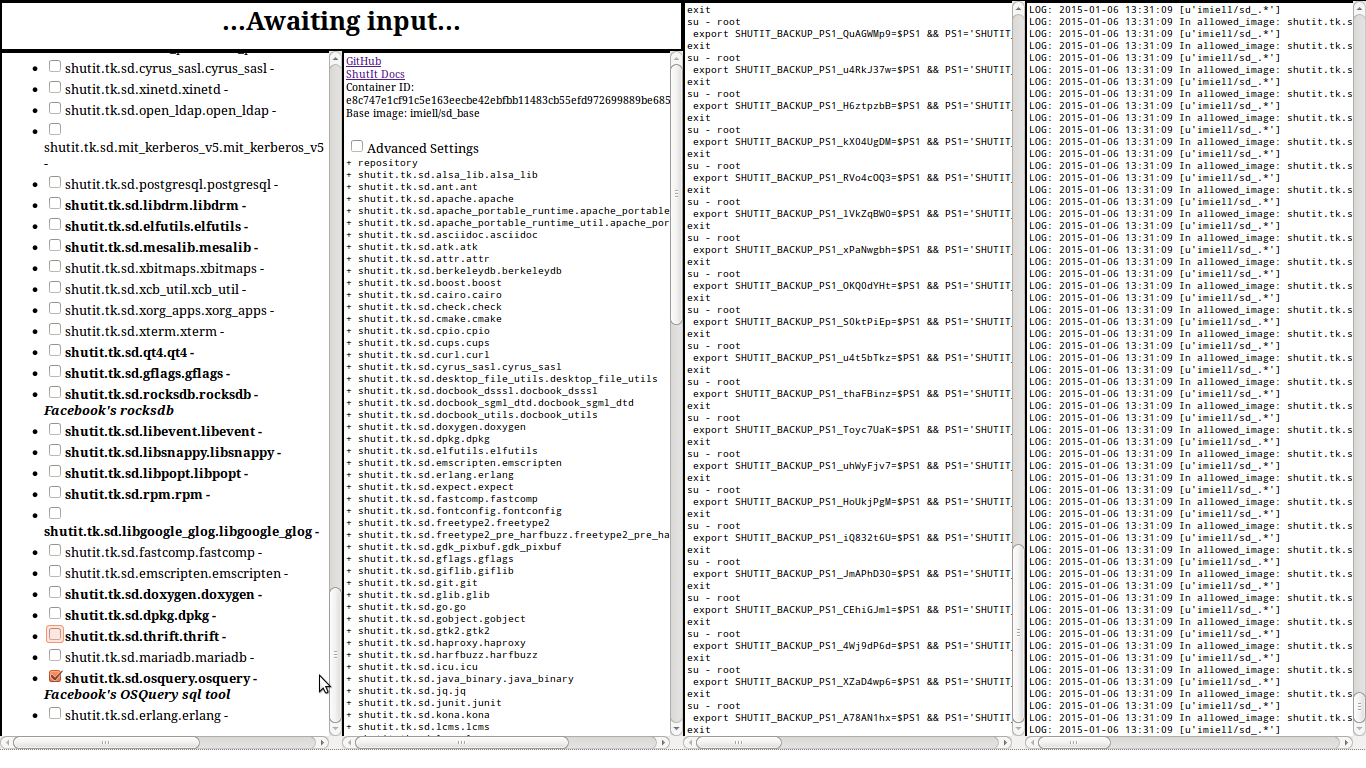

Then wait. This takes a long time (about 15 minutes on my machine).

While waiting, run this:

$ osc get build
NAME                 TYPE   STATUS    POD
jee-sample-build-1   STI    Pending   jee-sample-build-1

The build status will change to “Running”, and eventually “Finished”.
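Rather than re-running the command by hand, you can poll for the status. This is a sketch: `build_status` and `wait_for_build` are hypothetical helper names, the column layout is assumed to match the output shown above, and "Finished" is the only end state it checks for, so it gives up after a fixed number of tries.

```shell
# Print the STATUS column for one build, reading `osc get build` output on stdin.
build_status() {
    awk -v name="$1" '$1 == name { print $3 }'
}

# Poll every 30 seconds until the named build reports "Finished".
# Returns 1 if that has not happened after max_tries checks (default 60).
wait_for_build() {
    name="$1"
    max_tries="${2:-60}"
    tries=0
    while [ "$tries" -lt "$max_tries" ]; do
        status=$(osc get build | build_status "$name")
        echo "$name: ${status:-unknown}"
        [ "$status" = "Finished" ] && return 0
        tries=$((tries + 1))
        sleep 30
    done
    return 1
}
```

For the build above you would run `wait_for_build jee-sample-build-1` as osuser inside the container.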

Then you can view the application:

$ osc get service
NAME       LABELS   SELECTOR        IP             PORT
frontend   <none>   name=frontend   172.30.17.59   8080
mysql      <none>   name=database   172.30.17.97   3306

We want to access the front end, so with the above output, if you navigate to:

http://172.30.17.59:8080/movieplex7-1.0-SNAPSHOT/

you’ll see the movie app 🙂
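The IP address will differ on your machine, so it can be handy to build the URL from the service listing. This is a sketch: `service_url` is a hypothetical helper name, and it assumes the five-column layout shown above with no spaces inside the LABELS or SELECTOR values.

```shell
# Build an http URL for a named service from `osc get service` output on stdin.
# $1 is the service name, $2 is the path to append (may be empty).
service_url() {
    awk -v name="$1" -v path="$2" \
        '$1 == name { printf "http://%s:%s%s\n", $4, $5, path }'
}
```

For example, `osc get service | service_url frontend /movieplex7-1.0-SNAPSHOT/` prints the address to open in your browser.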

More to Come

There’s a lot more to this (which I’ll blog on), but this gives a taste of how scriptable deployments can be with OpenShift.

Source

The code for the example is available here:

git clone https://github.com/docker-in-practice/openshift-origin-play