In part V of this series of posts I take a slight detour to show you a couple of ‘debug nightmares’ that DNS threw at me.

Previous posts were:

Anatomy of a Linux DNS Lookup – Part I

Anatomy of a Linux DNS Lookup – Part II

Anatomy of a Linux DNS Lookup – Part III

Anatomy of a Linux DNS Lookup – Part IV

Both bugs threw up surprises not seen in previous posts…

Landrush and VMs

I’m a heavy Vagrant user, and I use it mostly for testing Kubernetes clusters of various kinds.

For these setups to work, DNS lookups between the various VMs must work smoothly. By default, each VM is addressable only by IP address.

To solve this, I use a Vagrant plugin called landrush. This works by creating a tiny Ruby DNS server on the host that runs the VMs. This DNS server runs on port 10053, and keeps and returns records for the VMs that are running. So, for example, you might have two VMs running (vm1 and vm2), and landrush will ensure that your DNS lookups for these hosts (eg vm1.vagrant.test and vm2.vagrant.test) will point to the right local IP address for each VM.

It does this by creating IPTables rules on the VM host and the VMs themselves. These IPTables rules divert DNS requests to the DNS server running on port 10053, and if there’s no match, it will re-route the request to the original DNS server specified in that context.

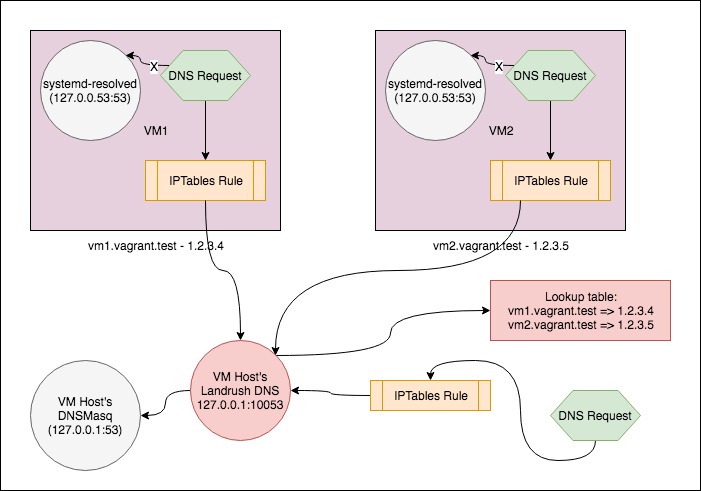

Here’s a diagram that might help visualise this:

vagrant-landrush DNS server

Above is a diagram that represents how Landrush DNS works with Vagrant. The box represents a host that’s running two Vagrant VMs (vm1 and vm2). These have the IP addresses 1.2.3.4 and 1.2.3.5 respectively.

A DNS request on either VM is redirected from the host’s resolver (in this case systemd-resolved) to the host’s Landrush DNS server. This is achieved using an IPTables rule on the VM.

The Landrush DNS server keeps a small database of the host mappings to IPs given out by Vagrant and responds to any requests for vm1.vagrant.test or vm2.vagrant.test with the appropriate local IP address. If the request is for another address, the request is forwarded on to the host’s configured DNS server (in this case DNSMasq).
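To make the "answer locally, otherwise forward" behaviour concrete, here is a minimal Python sketch. It is not Landrush's code (Landrush is written in Ruby); the `resolve` function, the `LOCAL_RECORDS` table and the `upstream` callable are hypothetical names used only for illustration, with the hostnames and IPs taken from the example above.

```python
from typing import Callable, Dict, Optional

# Hypothetical record table, using the example VMs from the diagram.
LOCAL_RECORDS: Dict[str, str] = {
    "vm1.vagrant.test": "1.2.3.4",
    "vm2.vagrant.test": "1.2.3.5",
}


def resolve(
    name: str,
    upstream: Callable[[str], Optional[str]],
    records: Dict[str, str] = LOCAL_RECORDS,
) -> Optional[str]:
    """Answer from the local table if possible, otherwise ask upstream."""
    # DNS names are case-insensitive and may carry a trailing dot.
    key = name.rstrip(".").lower()
    if key in records:
        return records[key]
    # No local match: hand the (normalised) name to the next resolver.
    return upstream(key)
```

Here `upstream` stands in for whatever DNS server the host was already configured to use, so anything that is not a known VM name falls through to it.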

Host lookups use the same IPTables mechanism to send DNS requests to the Landrush DNS server.

The Problem

Usually I use Ubuntu 16.04 machines for this, but when I tried 18.04 machines networking was failing on them:

$ curl google.com

curl: (6) Could not resolve host: google.com

At first I assumed the VM images themselves were faulty, but taking Landrush out of the equation restored networking fully.

Trying another tack, deleting the IPTables rule on the VM meant that networking worked also. So, mysteriously, the IPTables rule was not working. I tried stracing the two curl calls (working and not-working) to see what the difference was. There was a difference, but I had no idea why it might be happening.

As a next step I tried to take systemd-resolved and Landrush out of the equation (systemd-resolved was new between 16.04 and 18.04). I did this by using different IPTables rules:

- Direct requests to Google’s 8.8.8.8 DNS server rather than to the Landrush server (FAILED)
  - Showed that Landrush wasn’t the problem
- Direct /etc/resolv.conf to a different address (changed 127.0.0.53 to 9.8.7.6), and wire IPTables to Google’s DNS server (WORKED)
- Direct /etc/resolv.conf to a different address (changed 127.0.0.53 to 127.0.0.54), and wire IPTables to Google’s DNS server (FAILED)
  - Showed systemd-resolved was not necessarily the problem

The fact that using 9.8.7.6 instead of 127.0.0.53 as the DNS server IP address worked led me to think that pointing /etc/resolv.conf at a localhost address (ie one in the 127.0.0.* range) might be the problem.
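The pattern in those experiments can be checked mechanically. As a small illustration (the helper name `is_loopback` is my own), Python's standard `ipaddress` module confirms that the two addresses that failed are loopback addresses and the one that worked is not:

```python
import ipaddress


def is_loopback(addr: str) -> bool:
    """Return True if addr is in the loopback range (127.0.0.0/8 for IPv4)."""
    return ipaddress.ip_address(addr).is_loopback


# The three resolver addresses tried in the experiments above.
for addr in ("127.0.0.53", "127.0.0.54", "9.8.7.6"):
    print(addr, "loopback" if is_loopback(addr) else "not loopback")
```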

A quick google led me here, which suggested that the problem was a sysctl setting:

sysctl -w net.ipv4.conf.all.route_localnet=1

And all was fixed.
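If you want to check this setting from a script rather than by hand, sysctl values are exposed as files under /proc/sys on Linux. The sketch below reads the one used above; the function name `route_localnet_enabled` is an assumption of mine, and it returns `None` when the file cannot be read (for example on a non-Linux machine).

```python
from pathlib import Path
from typing import Optional

# net.ipv4.conf.all.route_localnet maps onto this path under /proc/sys.
ROUTE_LOCALNET = Path("/proc/sys/net/ipv4/conf/all/route_localnet")


def route_localnet_enabled(path: Path = ROUTE_LOCALNET) -> Optional[bool]:
    """Return True/False for the setting, or None if it cannot be read."""
    try:
        return path.read_text().strip() == "1"
    except OSError:
        # File missing or unreadable: we cannot say either way.
        return None


if __name__ == "__main__":
    print("route_localnet enabled:", route_localnet_enabled())
```

Note that this only reads the value; changing it still needs `sysctl -w` (or a write to the same file) as root.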

The gory detail of the debugging is here.

Takeaway

sysctl settings are yet another thing that can affect and break DNS lookup!

These and more such settings are listed here.

DNSMasq, UDP=>TCP and Large DNS Responses

The second bug threw up another surprise.

We had an issue in production where DNS lookups were taking a very long time within an OpenShift Kubernetes cluster.

Strangely, it only affected some lookups and not others. Also, the time taken to do the lookup was consistent. This suggested that there was some kind of timeout on the first DNS server requested, after which it fell back to a ‘working’ one.

We did manual requests using dig to the local DNSMasq server on one of the hosts that was ‘failing’. The DNS request returned instantly, so we were scratching our heads. Then a colleague pointed out that the DNS response was rather longer than normal, which rang a bell.

Soon enough, he came back with this RFC (RFC 5966), which states:

In the absence of EDNS0 (Extension Mechanisms for DNS 0) (see below), the normal behaviour of any DNS server needing to send a UDP response that would exceed the 512-byte limit is for the server to truncate the response so that it fits within that limit and then set the TC flag in the response header. When the client receives such a response, it takes the TC flag as an indication that it should retry over TCP instead.

which, to summarise, means that if the DNS response is over 512 bytes, then the DNS server will send back a truncated response with the TC flag set, and the client should make another request over TCP rather than UDP.
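To show where that TC flag actually lives, here is a short Python sketch that inspects the fixed 12-byte DNS header. The TC bit is the 0x0200 bit of the 16-bit flags field that follows the message ID. The helper names (`is_truncated`, `make_header`) are mine, and `make_header` only fabricates a bare header for demonstration rather than a full DNS message.

```python
import struct

# Flags field layout (16 bits): QR | Opcode(4) | AA | TC | RD | RA | Z(3) | RCODE(4)
TC_MASK = 0x0200


def is_truncated(message: bytes) -> bool:
    """Return True if the DNS message header has the TC (truncated) bit set."""
    if len(message) < 12:
        raise ValueError("DNS message shorter than the 12-byte header")
    _msg_id, flags = struct.unpack("!HH", message[:4])
    return bool(flags & TC_MASK)


def make_header(flags: int, msg_id: int = 0x1234) -> bytes:
    """Build a bare 12-byte header (one question, no records) for testing."""
    return struct.pack("!HHHHHH", msg_id, flags, 1, 0, 0, 0)


if __name__ == "__main__":
    # 0x8380 = response (QR) + TC + RD + RA; 0x8180 is the same without TC.
    print(is_truncated(make_header(0x8380)))
    print(is_truncated(make_header(0x8180)))
```

A client that sees `is_truncated(...)` return true for a UDP reply is expected to repeat the same query over TCP.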

We never fixed the root cause here, but suspected that DNSMasq was not correctly returning the TCP response to the requesting client. We found a setting that specified which interface DNSMasq would listen on. By limiting this to one interface, requests worked again.

From this, we reasoned there was a bug in DNSMasq where, if it was listening on more than one interface and the upstream DNS request resulted in a response bigger than 512 bytes, the response never reached the original requester.

Takeaway

Another DNS surprise – DNS can stop working if the DNS response is over 512 bytes and the program making the DNS request doesn’t handle this correctly.

Summary

DNS in Linux has even more surprises in store and things to check when things don’t go your way.

Here we saw how sysctl settings and plain old-fashioned bugs in seemingly battle-hardened code can affect your setup.

And we haven’t covered caching yet…

