- TL;DR

- Context

- Writing A 1996 Essay Again in 2023, This Time With Lots More Transistors

- ChatGPT 3

- Gathering the Sources

- PrivateGPT

- Ollama (and Llama2:70b)

- Hallucinations

- What I Learned

TL;DR

I used private and public LLMs to answer an undergraduate essay question I spent a week working on nearly 30 years ago, in an effort to see how the experience would have changed in that time. There were two rules:

- No peeking at the original essay, and

- No reading any of the suggested material, except to find references.

The experience turned out to be radically different with AI assistance in some ways, and similar in others.

If you’re not interested in my life story and the gory detail, skip to the end for what I learned.

Context

Although I work in software now, there was a time when I wanted to be a journalist. To that end I did a degree in History at Oxford. Going up in 1994, I entered an academic world before mobile phones, and with an Internet that was so nascent that the ‘computer room’ was still fighting for space with the snooker table for space next to the library (the snooker table was ejected a few years later, sadly). We didn’t even have phones in our dorms: you had to communicate with fellow students either in person, or via an internal ‘pigeon post’ system.

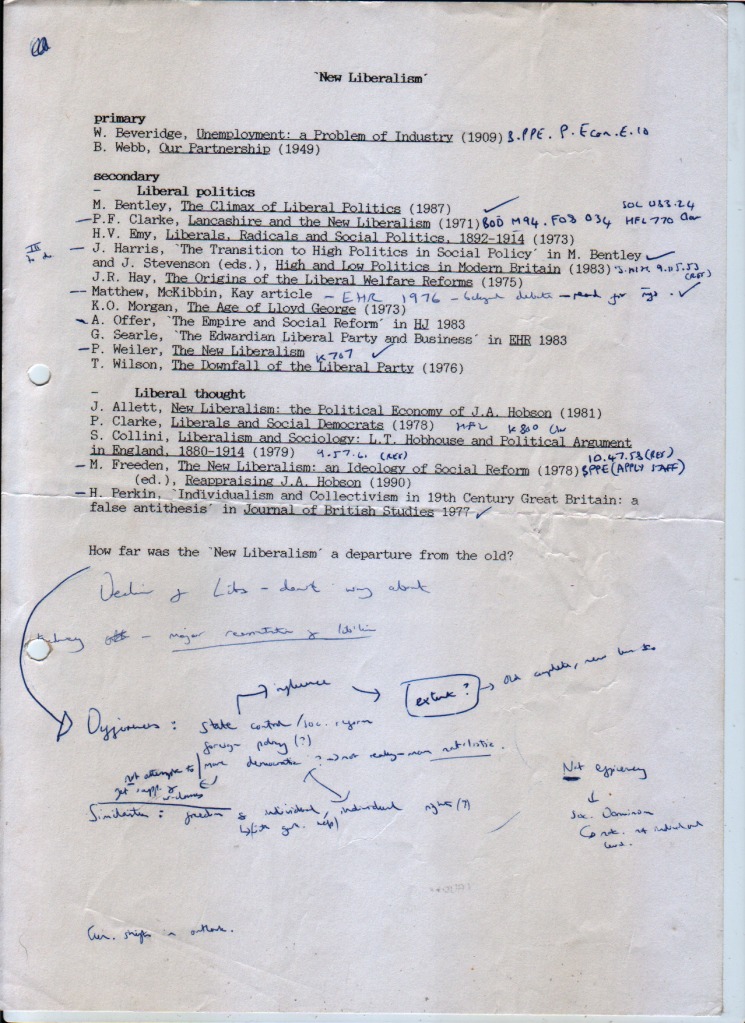

My typical week then was probably similar to a week of a History student in 1964: tutorials were generally on Friday, where I would be given the essay question and reading list for the following week:

That afternoon I would go to several libraries, hoping that other students studying the same module hadn’t borrowed the books on the list. Over the following week I would try to read and make notes on the material as much as possible given my busy schedule of drinking incredibly cheap and nasty red wine, feeling depressed, and suffering from the endless colds living in Oxford (which is built on a bog) gifts you free of charge.

The actual writing of the essay would take place on the day before delivery, and involve a lot of procrastination (solitaire and minesweeper on my Compaq laptop, as I recall) while the painful process of squeezing the juices from my notes took place.

I didn’t go to lectures. They were way too early in the afternoon for me to get out of bed for.

Writing A 1996 Essay Again in 2023, This Time With Lots More Transistors

The essay question I chose to answer was from a General British History (19th-20th century) course:

How far was the New Liberalism a Departure from the Old?

ChatGPT 3

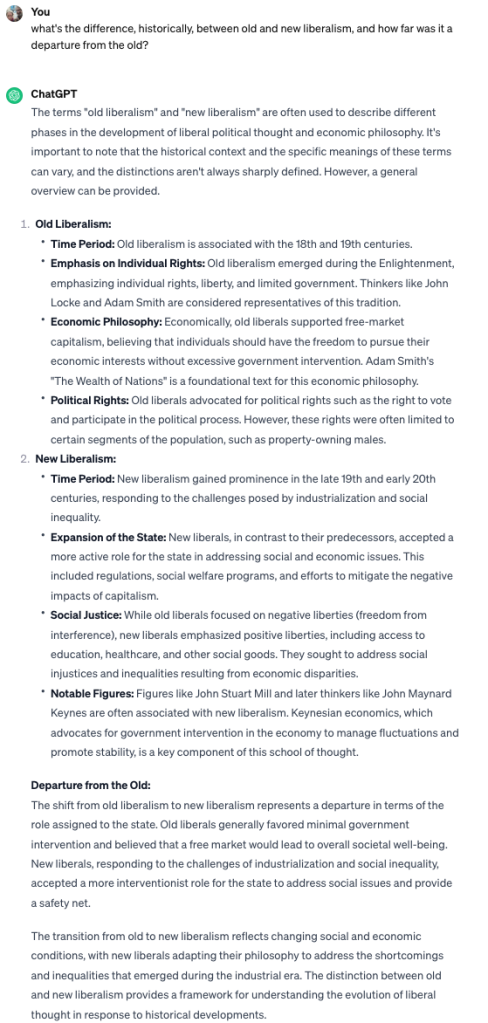

I first went to ChatGPT (3, the free version – I’m a poor student after all) to try and familiarise myself with the topic:

Already I was way ahead of my younger self. I had an idea of what the broad strokes I needed to hit were to get a passable essay together. ChatGPT is great for this kind of surveying or summarizing stuff, but is pretty bad at writing an undergraduate-level essay for you. It can write a passable essay (literally), but it will be very obvious it was written by an LLM, and probably at best get you a 2:2 or a third, due to the broad-brush tone and supine mealy-mouthed ‘judgements’ which contain very little content.

After a few more interactions with ChatGPT over the next few minutes:

I was now ready to gather the information I needed to write the essay.

Gathering the Sources

The first step was to get the materials. It took me a couple of hours to find all the texts (through various, uh, avenues) and process them into plain text. I couldn’t get the journal articles (they were academically paywalled), which was a shame, as they tended to be the best source of figuring out what academic debate was behind the question.

It totalled about 10M of data:

756K high_and_low_politics_in_modern_britain_bentley.txt

1.3M lancashire_and_the_new_liberalism.txt

904K liberalism_and_sociology_collini.txt

1012K liberals_and_social_democrats.txt

812K liberals_radicals_and_social_politics_emy.txt

684K new_liberalism_allett.txt

1.5M our_partnership__beatrice_webb.txt

556K the_age_of_lloyd_george_kenneth_o_morgan.txt

708K the_new_liberalism_freeden.txt

540K the_new_liberalism_weiler.txt

148K the_origins_of_the_liberal_welfare_reforms_hay.txt

1.1M unemployment_a_problem_of_industry_beveridge.txt

Those couple of hours would have been reduced significantly for any future essay I might choose to write, as I spent some time figuring out exactly where to get them and installing software to extract the plain text. So I would now allocate an hour to getting the vast majority of texts, as opposed to the small subset of texts I would hunt down over a significantly longer time in the physical libraries 30 years ago.

PrivateGPT

One of the well-known limitations with ChatGPT is that it doesn’t tell you what the relevant sources are that it looked at to generate the text it gives you.

I found out that this repo gives us a quick way to augment an LLM with a set of documents, so I installed it on an old Dell Xeon desktop with 24 cores and 128G of RAM. This machine cost me about £600 a couple of years ago, and still works a treat (I bought it to run Kubernetes clusters). It doesn’t have a graphics card in it, so I thought it would be usable for this kind of work, but it’s just fine with ‘normal’ CPUs (as long as you don’t demand instant responses).

$ python3 ingest.py

Appending to existing vectorstore at db

Using embedded DuckDB with persistence: data will be stored in: db

Loading documents from source_documents

Loading new documents: 100%|███████████████████| 12/12 [00:00<00:00, 274.84it/s]

Loaded 12 new documents from source_documents

Split into 38046 chunks of text (max. 350 tokens each)

Creating embeddings. May take some minutes…

Ingestion complete! You can now run privateGPT.py to query your documents

Ingestion of the 10M sources took about five minutes. I asked it a few questions, and this was a typical output:

> Question:

what was the changing role of the state in new versus old liberalism

> Answer (took 169.94 s.):

The main difference between "new" and "old" liberalism lies in their approach towards the role of the government within society. While traditional or 'Old' liberals believed that minimizing intervention by the State would lead to a more prosperous economy, ‘New’ Liberals acknowledged the need for state action and social reform as necessary measures against industrial poverty. In essence, new liberalism marked an evolution of thought among liberals towards greater acceptance of government responsibility in promoting general welfare through interventionist policies.

> source_documents/the_age_of_lloyd_george_kenneth_o_morgan.txt:

It was, therefore, the Old Liberalism which helped to repair the fortunes of the party—nonconformity and industrial free trade, the very essence of the Liberal

faith for half a century. Where, however, was the ‘New Liberalism’ which could embrace a more positive attitude towards the central government, for which many

> source_documents/the_new_liberalism_weiler.txt:

In the broadest sense, the term new Liberalism refers both to the welfare legislation of the 1906-14 administration and to the changes in Liberal social and

political theory. At the time, however, the term first referred to the theoretical changes which preceded the legislative achievements of the Liberal government.

> source_documents/the_new_liberalism_weiler.txt:

What then was the new Liberalism? As defined here, it was the modification of Liberal ideology from a vehicle for a mid-Victorian ideal of laissez-faire to a

philosophy based on state action and social reform to cope with industrial poverty. This modification was achieved by Liberal journalists and theorists seeking to

> source_documents/the_new_liberalism_weiler.txt:

state, a development that represents the fruition of the new Liberalism.

New Liberalism

The Political Economy

of J.A. Hobson

UNIVERSITY OF TORONTO PRESS

Toronto Buffalo London

Toronto Buffalo London

Printed in Canada

ISBN 0-8020-5558-3

> source_documents/the_new_liberalism_weiler.txt:

understanding of the phenomenon of the new Liberalism itself.

> source_documents/the_new_liberalism_freeden.txt:

THE SOCIAL POLICY OL THE NEW LIBERALISM

You can see that the output is mixed in quality (the model I used was relatively small, see Ollama section below), but it did point me at potentially relevant sources for quotes and bits to read. I spent about another hour rooting around these texts and gathering bits of text that I might use later. Note the response time, however (about three minutes is typical). I got a bit frustrated with the response time vs quality and went looking for other tech to use.

Ollama (and Llama2:70b)

This was the combination of technology that blew my mind. It absolutely changes the game for this kind of work. Ollama is a project designed to ‘get up and running with large language models locally’ which borrows concepts from Docker (like the Modelfile (similar to the Dockerfile, and ollama get/pull/list)

Compared to the ChatGPT output, Llama2:70b (with a Llama2 model with 70b parameters) allowed me to automate a significant chunk of the work needed to write the essay, reducing the time needed to write the essay from days to less than a day.

I downloaded the biggest model I could find (Llama2:70b) and asked it the question in a very unspecific way. It returned a much better essay plan than ChatGPT did:

At this point I started writing the body of the essay (introductions and conclusions are best left to the end), basing each paragraph on the numbered points above. As I wrote, looked at specific texts to find references and asked LLMs questions, something unexpected happened: I was learning about the nuances of the debate (mainly through looking the Collini book I hadn’t been able to find in the 90s).

I was getting sucked into a temptation to actually read the texts, and had to remind myself that I wasn’t allowed to do that, according to the rules of the ‘game’ I was playing here.

This appreciation of which texts were important, and what I was being invited to write about happened much faster than would have been the case pre-Internet. I really do wonder how students do this today (get in touch if you can tell me!).

Hallucinations

A well-known limitation of LLMs is their tendency to ‘hallucinate’, or ‘bullshit’ a plausible-seeming answer. I definitely saw this, and it wasted some time for me. Llama2 gave me this nice quote, which raised suspicions with me as it seemed too good to be true:

Evidence: In his book "Industrial Democracy" (1902), Webb argues that collective ownership and control of the means of production is necessary to address the systemic issues that perpetuate poverty and inequality. He writes, "The only way to secure industrial democracy is through the collective ownership and control of the means of production."

But I couldn’t find that quote anywhere in the book. Llama2 just made it up.

What I Learned

After writing my AI-assisted essay, I took a look at my original essay from 1996. To my surprise, the older one was far longer than I remembered essays being (about 2500 words to my AI-assisted one’s 1300), and was, it seemed to me, much higher quality than my AI-assisted one. It may have been longer because it was written in my final year, where I was probably at the peak of my essay-writing skills.

It took me about six hours of work (spread over 4 days) to write the AI-assisted essay. The original essay would have taken me a whole week, but the actual number of hours spent actively researching and writing it would have been at minimum 20, and at most 30 hours.

I believe I could have easily doubled the length of my essay to match my old one without reducing the quality of its content. But without actually reading the texts I don’t think I would have improved from there.

I learned that:

- There’s still no substitute for hard study. My self-imposed rule that I couldn’t read the texts limited the quality to a certain point that couldn’t be made up for with AI.

- History students using AI should be much more productive than they were in my day! I wonder whether essays are far longer now than they used to be.

- Private LLMs (I used llama2:70b) can be way more useful than ChatGPT3.5 for this type of work, not only in the quality of generated response, but also the capability to identify relevant passages of text.

- I might have reduced the time significantly with some more work to combine the llama2:70b model with the reference-generating code I had. More research is needed here.

Hope you enjoyed my trip down memory lane. The world of humanities study is not one I’m in any more, but it must be being changed radically by LLMs, just as it must have been by the internet. If you’re in that world now, and want to update me on how you’re using AI, get in touch.

The git repo with essay and various interactions with LLMs is here.

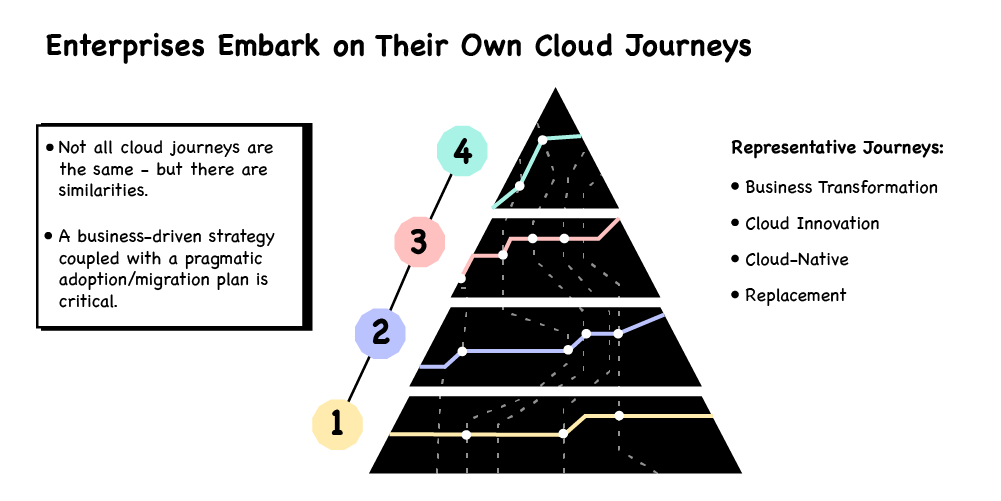

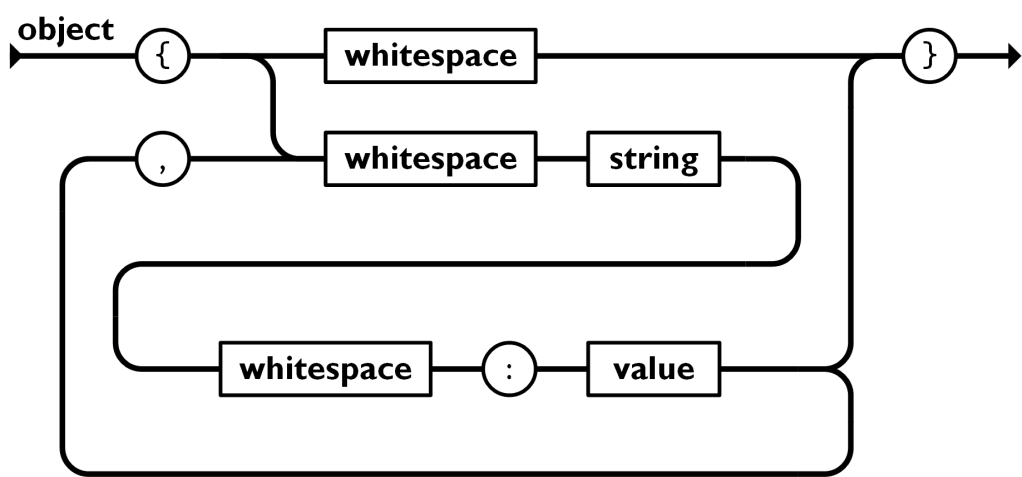

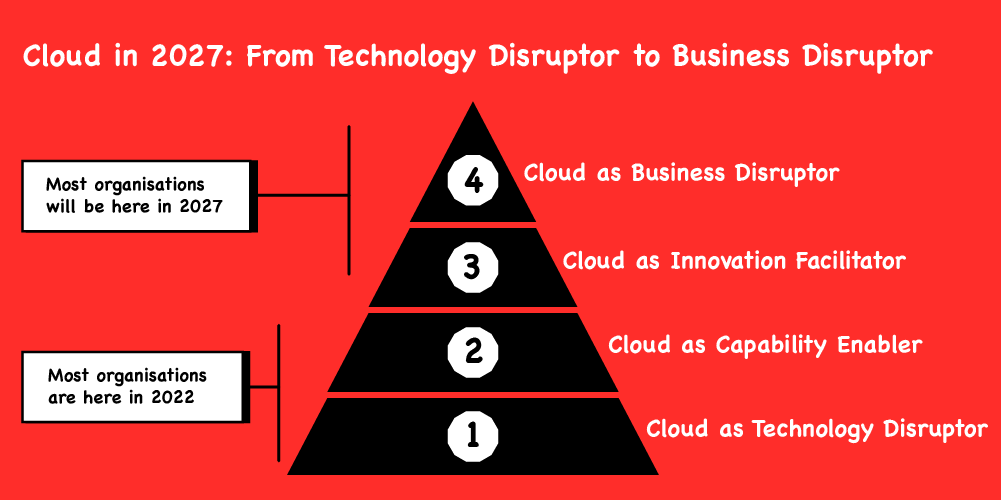

Whilst it is somewhat higher level, this pyramid is similar to our Maturity Matrix in that it helps give you a common visual reference point for a comprehensible and tangible view of both where you are, and where you are trying to get to, in your Cloud Native program. For example, it can help in discussions with technologists to ask them how the changes they are planning relate to stage four. Similarly, when talking to senior leaders about stage four, it can help to clarify whether they and their organisation have thought about the various dependencies below their goal and how they relate to each other.

Whilst it is somewhat higher level, this pyramid is similar to our Maturity Matrix in that it helps give you a common visual reference point for a comprehensible and tangible view of both where you are, and where you are trying to get to, in your Cloud Native program. For example, it can help in discussions with technologists to ask them how the changes they are planning relate to stage four. Similarly, when talking to senior leaders about stage four, it can help to clarify whether they and their organisation have thought about the various dependencies below their goal and how they relate to each other.