Intro

This post walks through a ‘hello world’ GitOps example I use to demonstrate key GitOps principles.

If you’re not aware, GitOps is a term coined in 2017 to encapsulate engineering principles that were becoming more common with the advent of recent software deployment and maintenance tooling.

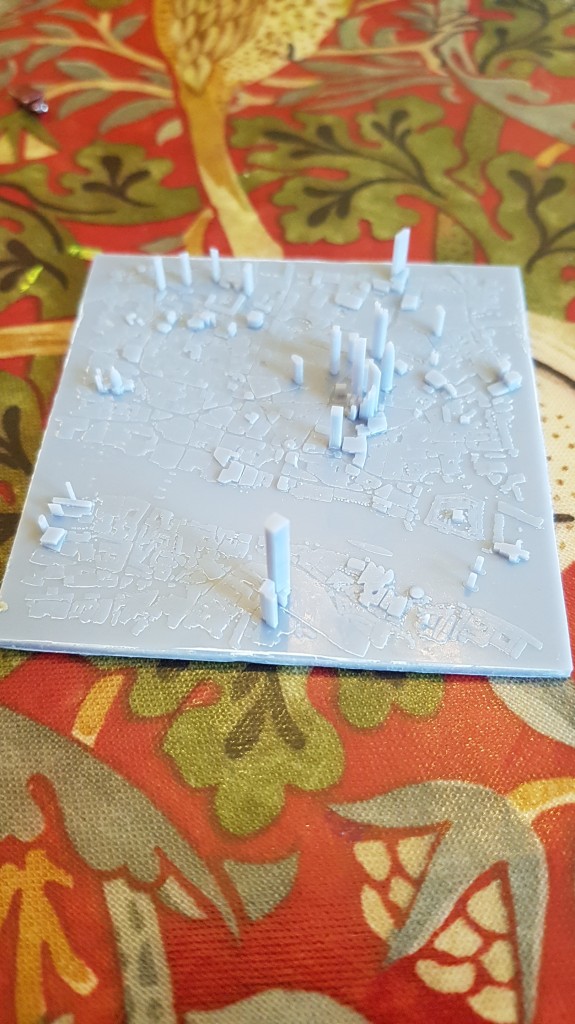

If you want to know more about the background and significance of GitOps, I wrote an ebook on the subject, available for download from my company. One of the more fun bits of writing that book was creating this diagram, which shows the historical antecedents of the latest GitOps tooling, divided into the three principles of declarative code, source control, and distributed control loop systems.

This post, by contrast, is a detailed breakdown of one implementation of a trivial application. It uses the following technologies and tools:

- Docker

- Kubernetes

- GitHub

- GitHub Actions

- Shell

- Google Kubernetes Engine

- Terraform

Overview

The example consists of four repositories:

It should be viewed in conjunction with this diagram to get an overview of what’s going on in the example. I’ll be referring to the steps from 0 to 5 in some detail below:

There are three ‘actors’ in this example: a developer (Dev), an operations engineer (Ops), and an infrastructure engineer (Infra). Dev is responsible for the application code, Ops is responsible for deployment, and Infra is responsible for the platform on which the deployment runs.

The repository structure reflects this separation of concerns. In reality, all roles could be fulfilled by the same person, or there could be even more separation of duties.

Also, the code need not be separated in this way. In theory, just one repository could be used for all four purposes. I discuss these kinds of ‘GitOps Decisions’ in my GitOps Decisions post.

If you like this, you might like one of my books:

Learn Bash the Hard Way

Learn Git the Hard Way

Learn Terraform the Hard Way

The Steps

Here’s an overview of the steps outlined below:

- A – Pre-Requisites

- B – Fork The Repositories

- C – Create The Infrastructure

- D – Set Up Secrets And Keys

- D1 – Docker Registry Login Secret Setup

- D2 – Set Up Repository Access Token

- D3 – Install And Set Up FluxCD

- E – Build And Run Your Application

A – Pre-Requisites

You will need:

- A GitHub account

- A Google Cloud account

- A Docker registry account (eg docker.com)

- kubectl installed on your host

- fluxctl installed on your host

- gcloud installed on your host

- terraform installed on your host

B – Fork the Repositories

Fork these three repositories to your own GitHub account:

C – Create the Infrastructure

This step uses the infra repository to create a Kubernetes cluster on which your workload will run, with its configuration stored as code.

The main branch of this repository is empty; instead, there is a branch for each cloud provider you might want to use.

The best-tested branch is the Google Cloud Platform (gcp) branch, which we cover here.

The code itself consists of four Terraform files:

- connections.tf - defines the connection to GCP

- kubernetes.tf - defines the configuration of a Kubernetes cluster

- output.tf - defines the output of the Terraform module

- vars.tf - variable definitions for the module

To set this up for your own purposes:

- Check out the gcp branch of your fork of the code

- Set up a Google Cloud account and project

- Log into Google Cloud on the command line:

gcloud auth login

- Update components in case they have changed since you installed gcloud:

gcloud components update

- Set the project name:

gcloud config set project <GCP PROJECT NAME>

- Enable the GCP container APIs:

gcloud services enable container.googleapis.com

- Add a terraform.tfvars file that sets the following items:

  - cluster_name - Name you give your cluster
  - linux_admin_password - Password for the hosts in your cluster
  - gcp_project_name - The ID of your Google Cloud project
  - gcp_project_region - The region in which the cluster should be located, default is us-west1
  - node_locations - Zones in which nodes should be placed, default is ["us-west1-b","us-west1-c"]
  - cluster_cp_location - Zone for control plane, default is us-west1-a

- Run terraform init

- Run terraform plan

- Run terraform apply

- Get kubectl credentials from Google, eg:

gcloud container clusters get-credentials <CLUSTER NAME> --zone <CLUSTER CP LOCATION>

- Check you have access by running kubectl cluster-info

- Create the gitops-example namespace:

kubectl create namespace gitops-example
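If you set up clusters repeatedly, the terraform.tfvars step can be scripted. The following is a minimal sketch, assuming a POSIX shell; the write_tfvars function and the environment variable names it reads are hypothetical, the defaults use the us-west1 region and the zones listed above, and it assumes node_locations is declared as a list of strings in vars.tf (check the gcp branch for the authoritative types).

```shell
#!/bin/sh
# Hypothetical helper: write a terraform.tfvars for the gcp branch.
# Values come from environment variables whose names were chosen for this sketch.
write_tfvars() {
  out="${1:-terraform.tfvars}"
  # These three have no default, so refuse to write a half-filled file.
  if [ -z "${CLUSTER_NAME:-}" ] || [ -z "${LINUX_ADMIN_PASSWORD:-}" ] ||
     [ -z "${GCP_PROJECT_NAME:-}" ]; then
    echo "CLUSTER_NAME, LINUX_ADMIN_PASSWORD and GCP_PROJECT_NAME must be set" >&2
    return 1
  fi
  # Defaults: us-west1 region, nodes in two zones, control plane in a third.
  default_nodes='["us-west1-b","us-west1-c"]'
  region="${GCP_PROJECT_REGION:-us-west1}"
  nodes="${NODE_LOCATIONS:-$default_nodes}"
  cp_location="${CLUSTER_CP_LOCATION:-us-west1-a}"
  # node_locations is written unquoted because it is an HCL list.
  cat > "$out" <<EOF
cluster_name         = "${CLUSTER_NAME}"
linux_admin_password = "${LINUX_ADMIN_PASSWORD}"
gcp_project_name     = "${GCP_PROJECT_NAME}"
gcp_project_region   = "${region}"
node_locations       = ${nodes}
cluster_cp_location  = "${cp_location}"
EOF
}
```

For example, running CLUSTER_NAME=gitops-example LINUX_ADMIN_PASSWORD=... GCP_PROJECT_NAME=... write_tfvars in the checked-out gcp branch writes terraform.tfvars next to the .tf files, ready for terraform init.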

If all goes to plan, you have now set up a Kubernetes cluster on which you can run your workload, and you are ready to install FluxCD. But before you do that, you need to set up the secrets required across the repositories to make all the repos and deployments work together.

D – Set Up Secrets And Keys

In order to coordinate the various steps in the GitOps workflow, you have to set up three sets of secrets across the GitHub repositories and the cluster. These allow:

- The Kubernetes cluster to log into the Docker registry you want to pull your image from

- The gitops-example-app repository's action to update the image identifier in the gitops-example-deploy repository

- FluxCD to access the gitops-example-deploy GitHub repository from the Kubernetes cluster

D1 – Docker Registry Login Secret Setup

First, create two secrets in the gitops-example-app repository at:

https://github.com/<YOUR GITHUB USERNAME>/gitops-example-app/settings/secrets/actions

- DOCKER_USER - Contains your Docker registry username

- DOCKER_PASSWORD - Contains your Docker registry password

Next, you set up your Kubernetes cluster so it has these credentials.

- Run this command, replacing the variables with your values:

kubectl create -n gitops-example secret docker-registry regcred --docker-server=docker.io --docker-username=$DOCKER_USER --docker-password=$DOCKER_PASSWORD --docker-email=$DOCKER_EMAIL
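For reference, kubectl create secret docker-registry stores the credentials as a secret of type kubernetes.io/dockerconfigjson, whose .dockerconfigjson key holds a small JSON document. The sketch below rebuilds that document in plain shell so you can see what the cluster will present to the registry. The dockerconfigjson function name is hypothetical, and it assumes the values contain no double quotes or backslashes, since it does no JSON escaping.

```shell
#!/bin/sh
# Hypothetical helper: print the JSON that kubectl stores under the
# .dockerconfigjson key of a docker-registry secret.
# Assumes none of the arguments need JSON escaping.
dockerconfigjson() {
  server="$1"; user="$2"; password="$3"; email="$4"
  # The "auth" field is base64("username:password"); tr strips any line wrapping.
  auth=$(printf '%s:%s' "$user" "$password" | base64 | tr -d '\n')
  printf '{"auths":{"%s":{"username":"%s","password":"%s","email":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$password" "$email" "$auth"
}
```

To compare against the real secret, kubectl get secret regcred -n gitops-example -o jsonpath='{.data.\.dockerconfigjson}' | base64 --decode should show the same fields for docker.io.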

D2 – Set Up Repository Access Token

First, create a personal access token in GitHub.

- Generate a token called EXAMPLE_GITOPS_DEPLOY_TRIGGER from your GitHub account's developer settings.

- Give the token the full repo scope, so it can read and write to private repositories.

- Copy that token value into a secret with the same name (EXAMPLE_GITOPS_DEPLOY_TRIGGER) in your gitops-example-app repository at:

https://github.com/<YOUR GITHUB USERNAME>/gitops-example-app/settings/secrets/actions

D3 – Install And Set Up FluxCD

Finally, you set up FluxCD in your Kubernetes cluster, so it can read from and write back to the gitops-example-deploy repository.

- The most up-to-date FluxCD deployment instructions can be found in the FluxCD documentation, but this is what I run on GCP to set up FluxCD on my cluster:

kubectl create clusterrolebinding "cluster-admin-$(whoami)" --clusterrole=cluster-admin --user="$(gcloud config get-value core/account)"

kubectl create ns flux

fluxctl install --git-branch main --git-user=<YOUR GITHUB USERNAME> --git-email=<YOUR GITHUB EMAIL> --git-url=git@github.com:<YOUR GITHUB USERNAME>/gitops-example-deploy --git-path=namespaces,workloads --namespace=flux | kubectl apply -f -

- When the installation is complete, this command will return a key generated by FluxCD on the cluster:

fluxctl identity --k8s-fwd-ns flux

- Take this key and add it as a deploy key in the gitops-example-deploy repository at this link:

https://github.com/<YOUR GITHUB USERNAME>/gitops-example-deploy/settings/keys/new

- Call the key flux

- Tick the ‘write access’ option

- Click ‘Add Key’

You have now set up all the secrets that need setting up to make the flow work.

You will now make a change and follow the steps as the application builds and deploys without any intervention from you.

E – Build And Run Your Application

To deploy your application, all you need to do is make a change to the application in your gitops-example-app repository.

- Steps 1a, 2 and 3

Go to:

https://github.com/<YOUR GITHUB USERNAME>/gitops-example-app/blob/main/Dockerfile

and edit the file, changing the text in the echo command to whatever you like. Commit the change and push it to the repository.

This push (step 1a above) triggers the Docker login, build and push via a GitHub action (steps 2 and 3), which are specified in code here:

https://github.com/ianmiell/gitops-example-app/blob/main/.github/workflows/main.yaml#L13-L24

This action uses a couple of Docker actions (docker/login-action and docker/build-push-action) to build and push the new image, tagged with the Git SHA of the commit. GitHub Actions provides the SHA as a variable (github.sha) within the action’s run. The action also uses the DOCKER secrets set up earlier. Here’s a snippet:

```yaml
- name: Log in to Docker Hub
  uses: docker/login-action@f054a8b539a109f9f41c372932f1ae047eff08c9
  with:
    username: ${{secrets.DOCKER_USER}}
    password: ${{secrets.DOCKER_PASSWORD}}
- name: Build and push Docker image
  id: docker_build
  uses: docker/build-push-action@ad44023a93711e3deb337508980b4b5e9bcdc5dc
  with:
    context: .
    push: true
    tags: ${{secrets.DOCKER_USER}}/gitops-example-app:${{ github.sha }}
```

- Step 4

Once the image is pushed to the Docker registry, a further step calls the peter-evans/repository-dispatch action, which triggers another action that updates the gitops-example-deploy Git repository (step 4 above):

```yaml
- name: Repository Dispatch
  uses: peter-evans/repository-dispatch@v1
  with:
    token: ${{ secrets.EXAMPLE_GITOPS_DEPLOY_TRIGGER }}
    repository: <YOUR GITHUB USERNAME>/gitops-example-deploy
    event-type: gitops-example-app-trigger
    client-payload: '{"ref": "${{ github.ref }}", "sha": "${{ github.sha }}"}'
```

It uses the personal access token secret EXAMPLE_GITOPS_DEPLOY_TRIGGER created earlier to give the action the rights to update the specified repository. It also passes in an event-type value (gitops-example-app-trigger) so that the action in the other repository knows what to do. Finally, it passes in a client-payload containing two variables, github.ref and github.sha, which GitHub Actions makes available to the workflow.

This configuration passes all the information needed by the action specified in the gitops-example-deploy repository to update its deployment configuration.
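Under the hood, a repository dispatch is a POST to GitHub's REST endpoint /repos/{owner}/{repo}/dispatches with an event_type and an optional client_payload. The sketch below sends the same event from a shell, which can be handy for testing the gitops-example-deploy workflow without pushing a new image. The dispatch_body and send_dispatch function names are hypothetical; it assumes curl is installed and that GITHUB_TOKEN holds a token with the same rights as EXAMPLE_GITOPS_DEPLOY_TRIGGER.

```shell
#!/bin/sh
# Hypothetical helpers that mimic the peter-evans/repository-dispatch step.

# Print the request body: the event type plus the same client payload fields.
dispatch_body() {
  event_type="$1"; ref="$2"; sha="$3"
  printf '{"event_type":"%s","client_payload":{"ref":"%s","sha":"%s"}}\n' \
    "$event_type" "$ref" "$sha"
}

# POST the event to the target repository (given as owner/repo).
send_dispatch() {
  repo="$1"; ref="$2"; sha="$3"
  if [ -z "${GITHUB_TOKEN:-}" ]; then
    echo "GITHUB_TOKEN is not set" >&2
    return 1
  fi
  # curl reads the JSON body from stdin (@-); -f makes HTTP errors fail.
  dispatch_body gitops-example-app-trigger "$ref" "$sha" |
    curl -fsS -X POST \
      -H "Accept: application/vnd.github+json" \
      -H "Authorization: Bearer ${GITHUB_TOKEN}" \
      --data-binary @- \
      "https://api.github.com/repos/${repo}/dispatches"
}
```

For example, send_dispatch <YOUR GITHUB USERNAME>/gitops-example-deploy refs/heads/main <SHA OF AN IMAGE YOU PUSHED> should start the receiving workflow described next.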

The other side of step 4 is the ‘receiving’ GitHub Action code here:

https://github.com/ianmiell/gitops-example-deploy/blob/main/.github/workflows/main.yaml

Among the first lines are these:

```yaml
on:
  repository_dispatch:
    types: gitops-example-app-trigger
```

These lines tell the action to run only on a repository dispatch whose event type is gitops-example-app-trigger. Since that is the event type sent by the gitops-example-app action above, this is the action that gets triggered in the gitops-example-deploy repository.

The first thing this action does is check out and update the code:

```yaml
- name: Check Out The Repository
  uses: actions/checkout@v2
- name: Update Version In Checked-Out Code
  if: ${{ github.event.client_payload.sha }}
  run: |
    sed -i "s@\(.*image:\).*@\1 docker.io/${{secrets.DOCKER_USER}}/gitops-example-app:${{ github.event.client_payload.sha }}@" ${GITHUB_WORKSPACE}/workloads/webserver.yaml
```

If a sha value was passed in the client payload of the GitHub event, a sed command updates the deployment code: the image line in the workloads/webserver.yaml Kubernetes specification is rewritten to reference the new tag of the Docker image we built and pushed.
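You can try the same substitution locally before relying on it in the workflow. The sketch below wraps the action's sed expression in a hypothetical update_image function, using -i.bak so it behaves the same with GNU and BSD sed; the Docker username, SHA values and sample manifest in the check are made up for illustration.

```shell
#!/bin/sh
# Hypothetical wrapper around the workflow's sed command.
# Rewrites every line containing "image:" to point at the new image tag.
update_image() {
  file="$1"; docker_user="$2"; sha="$3"
  # Same expression as the action: keep everything up to "image:",
  # replace the rest of the line with the new image reference.
  sed -i.bak "s@\(.*image:\).*@\1 docker.io/${docker_user}/gitops-example-app:${sha}@" "$file" &&
    rm -f "${file}.bak"
}
```

Running update_image workloads/webserver.yaml <DOCKER USER> <SHA> in a checkout of gitops-example-deploy should produce the same one-line diff that the action commits. Note that, like the original, it touches every line containing image:, so it assumes the manifest has a single container.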

Once the code has been updated within the action, you commit and push using the stefanzweifel/git-auto-commit-action action:

```yaml
- name: Commit The New Image Reference
  uses: stefanzweifel/git-auto-commit-action@v4
  if: ${{ github.event.client_payload.sha }}
  with:
    commit_message: Deploy new image ${{ github.event.client_payload.sha }}
    branch: main
    commit_options: '--no-verify --signoff'
    repository: .
    commit_user_name: Example GitOps Bot
    commit_user_email: <AN EMAIL ADDRESS FOR THE COMMIT>
    commit_author: <A NAME FOR THE COMMITTER> << AN EMAIL ADDRESS FOR THE COMMITTER>>
```

- Step 5

Now that the deployment configuration has been updated, we wait for FluxCD to notice the change. After a few minutes, the Flux controller will notice that the main branch of the gitops-example-deploy repository has changed, and try to apply the YAML configuration in that repository to the Kubernetes cluster. This updates the workload.

If you port-forward to the application’s service, and hit it using curl or your browser, you should see that the application’s output has changed to whatever you committed above.
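Rather than guessing when Flux has synced, you can poll the deployment until it reports the new image. This is a sketch under assumptions: the wait_for_image function is hypothetical, the deployment name you pass in is up to you (webserver below is only a guess based on the workloads/webserver.yaml filename), and it checks only the first container in the pod template. That field changes as soon as Flux applies the manifest, before the rollout finishes, so kubectl rollout status can be used afterwards to wait for the pods themselves. With Flux v1 you can also request an immediate sync with fluxctl sync --k8s-fwd-ns flux.

```shell
#!/bin/sh
# Hypothetical helper: poll until a deployment's first container uses the
# expected image, or give up after a number of attempts.
wait_for_image() {
  namespace="$1"; deployment="$2"; expected="$3"
  tries="${4:-30}"; delay="${5:-10}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # Read the image from the deployment's pod template spec.
    current=$(kubectl -n "$namespace" get deployment "$deployment" \
      -o jsonpath='{.spec.template.spec.containers[0].image}' 2>/dev/null)
    if [ "$current" = "$expected" ]; then
      echo "deployment/$deployment is running $current"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for $expected (last seen: ${current:-none})" >&2
  return 1
}
```

For example: wait_for_image gitops-example webserver docker.io/<DOCKER USER>/gitops-example-app:<SHA>, which checks every 10 seconds for up to five minutes by default.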

And then you’re done! You’ve created an end-to-end GitOps continuous delivery pipeline from code to cluster that requires no intervention other than a code change!

Cleanup

Don’t forget to run terraform destroy on your cluster, to avoid incurring a large bill with your cloud provider!

Lessons Learned

Even though this is as simple an example as I could make, you can see that it involves quite a bit of configuration and setup. If anything went wrong in the pipeline, you’d need to understand quite a lot to be able to debug it and fix it.

In addition, there are numerous design decisions you need to make to get your GitOps workflow working for you. Some of these are covered in my previous GitOps Decisions post.

Anyone who has automated anything will know that this setup and debugging overhead is a tax on the benefits of automation. It should not be underestimated by any team looking to take up this deployment methodology.

On the other hand, once the mental model of the workflow is internalised by a team, significant savings and improvements to delivery are seen.


If you enjoyed this, then please consider buying me a coffee to encourage me to do more.